I self-host services on a beefy server in a datacenter. Every night, Kopia performs a backup of my volumes and sends the result to an s3 bucket in Scaleway's Parisian datacenter.

The VPS is expensive, and I want to move my services to a Raspberry Pi at home. Before actually moving the services, I wanted to see how the Raspberry Pi would handle them with real-life data. To do so, I downloaded kopia on the Raspberry Pi, connected it to my s3 bucket in Scaleway's datacenter, and attempted to restore the data from a snapshot of a 2.8GB volume.

thib@tinykube:~ $ kopia restore k1669883ce6d009e53352fddeb004a73a

Restoring to local filesystem (/tmp/snapshot-mount/k1669883ce6d009e53352fddeb004a73a) with parallelism=8...

Processed 395567 (3.6 KB) of 401786 (284.4 MB) 13.2 B/s (0.0%) remaining 6000h36m1s.

A restore speed in bytes per second? It would take 6000h, that is 250 days, to transfer 2.8GB from an s3 bucket to the Raspberry Pi in my living room? Put differently, it means I can't restore backups to my Raspberry Pi, making it unfit for production as a homelab server in its current state.
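The estimate kopia prints can be checked by hand: the remaining time is the remaining data divided by the current rate. Below is a minimal sketch of that arithmetic, using the figures from the progress line above. The function name `eta_seconds` is made up for this example, and it assumes kopia's MB means 10^6 bytes.

```python
def eta_seconds(total_bytes: float, done_bytes: float, rate_bytes_per_s: float) -> float:
    """Time left at a constant rate, in seconds."""
    return (total_bytes - done_bytes) / rate_bytes_per_s


# Figures from the kopia progress line (assuming 1 MB = 10**6 bytes).
total = 284.4e6   # 284.4 MB
done = 3.6e3      # 3.6 KB
rate = 13.2       # 13.2 B/s

seconds = eta_seconds(total, done, rate)
hours = seconds / 3600
days = hours / 24
print(f"{hours:.0f} hours, or about {days:.0f} days")
```

The result lands very close to the 6000h that kopia reports, so the estimate is consistent with the displayed rate, however absurd that rate is.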

Let's try to understand what happens, and if I can do anything about it.

The set-up

Let's list all the ingredients we have:

- A beefy VPS (16 vCPU, 48 GB of RAM, 1 TB SSD) in a German datacenter

- A Raspberry Pi 4 (8 GB of RAM) in my living room, booting from an encrypted drive to avoid data leaks in case of burglary. That NVMe disk is connected to the Raspberry Pi via a USB 3 enclosure.

- An s3 bucket that the VPS pushes to, and that the Raspberry Pi pulls from

- A fiber Internet connection for the Raspberry Pi to download data

Where the problem can come from

Two computers and a cloud s3 bucket look like a fairly simple setup, but plenty of things can already fail or be slow! Let's list them and check whether the problem could come from there.

Network could be slow

I have a fiber plan, but maybe my ISP lied to me, or maybe I'm using a poor quality ethernet cable to connect my Raspberry Pi to my router. Let's do a simple test by installing Ookla's speedtest CLI on the Pi.

I can list the nearest servers

thib@tinykube:~ $ speedtest -L

Closest servers:

ID Name Location Country

==============================================================================

67843 Syxpi Les Mureaux France

67628 LaNetCie Paris France

63829 EUTELSAT COMMUNICATIONS SA Paris France

62493 ORANGE FRANCE Paris France

61933 Scaleway Paris France

27961 KEYYO Paris France

24130 Sewan Paris France

28308 Axione Paris France

52534 Virtual Technologies and Solutions Paris France

62035 moji Paris France

41840 Telerys Communication Paris France

Happy surprise, Scaleway, my s3 bucket provider, is among the test servers! Let's give it a go

thib@tinykube:~ $ speedtest -s 61933

[...]

Speedtest by Ookla

Server: Scaleway - Paris (id: 61933)

ISP: Free SAS

Idle Latency: 12.51 ms (jitter: 0.47ms, low: 12.09ms, high: 12.82ms)

Download: 932.47 Mbps (data used: 947.9 MB)

34.24 ms (jitter: 4.57ms, low: 12.09ms, high: 286.97ms)

Upload: 907.77 Mbps (data used: 869.0 MB)

25.42 ms (jitter: 1.85ms, low: 12.33ms, high: 40.68ms)

Packet Loss: 0.0%

With a download speed of 900 Mb/s ≈ 112 MB/s between Scaleway and my Raspberry Pi, it looks like the network is not the core issue.
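Speedtest reports megabits per second, while kopia and the aws CLI report megabytes per second, so the two are a factor of 8 apart. Here is a small sketch of the conversion using the measured download figure. The helper name `mbps_to_megabytes_per_s` is an assumption of this example.

```python
def mbps_to_megabytes_per_s(mbps: float) -> float:
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return mbps / 8


download = mbps_to_megabytes_per_s(932.47)   # measured download speed
volume_mb = 2.8 * 1000                       # the 2.8 GB volume, in MB

print(f"{download:.1f} MB/s")
print(f"{volume_mb / download:.0f} s to download 2.8 GB at line rate")
```

At that rate the whole volume would come down in well under a minute, which is why the network alone cannot explain a restore measured in bytes per second.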

The s3 provider could have an incident

I could test that the network itself is not to blame, but I don't know exactly what is being downloaded and from what server. Maybe Scaleway's s3 platform itself has an issue and is slow?

Let's use aws-cli to just pull the data from the bucket without performing any kind of operation on it. Scaleway provides detailed instructions about how to use aws-cli with their services. After following them, I can download a copy of my s3 bucket on the encrypted disk attached to my Raspberry Pi with

thib@tinykube:~ $ aws s3 sync s3://ergaster-backup/ /tmp/s3 \

--endpoint-url https://s3.fr-par.scw.cloud

It downloads at a speed of 1 to 2 MB/s. Very far from what I would expect. It could be tempting to stop here and think Scaleway is unjustly throttling my specific bucket. But more things could actually be happening.

Like most providers, Scaleway has egress fees. In other words, they bill customers who pull data out of their s3 buckets. It means that if I'm going to do extensive testing, I will end up with a significant bill. I've let the sync command finish overnight so I could have a local copy of my bucket on my Raspberry Pi's encrypted disk.

After it's done, I can disconnect kopia from my s3 bucket with

thib@tinykube:~ $ kopia repository disconnect

And I can connect it to the local copy of my bucket with

thib@tinykube:~ $ kopia repository connect filesystem \

--path=/tmp/s3

Attempting to restore a snapshot gives me the same terrible speed as earlier. Something is up with the restore operation specifically. Let's try to understand what happens.

Kopia could be slow to extract data

Kopia performs incremental, encrypted, compressed backups to a repository. There's a lot of information packed in this single sentence, so let's break it down.

How kopia does backups

When performing a first snapshot of a directory, Kopia doesn't just upload files as it finds them. Instead, it splits the files into small chunks, all of the same size on average. It computes a hash for each of them, which will serve as a unique identifier. It records in an index table which chunk (identified by its hash) belongs to which file in which snapshot. And finally, it compresses, encrypts, and uploads the chunks to the repository.

When performing a second snapshot, instead of just uploading all the files again, kopia performs the same file splitting operation. It hashes each chunk again and looks up in the index table whether the hash is already present. If that's the case, it means the corresponding chunk has already been backed up and doesn't need to be re-uploaded. If not, it writes the hash to the table, compresses and encrypts the new chunk, and sends it to the repository.

Splitting the files and computing a hash for the chunks allows kopia to only send the data that has changed, even in large files, instead of uploading whole directories.

The algorithm to split the files in small chunks is called a splitter. The algorithm to compute a hash for each chunk is called... a hash.
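To make the deduplication idea concrete, here is a toy sketch, not kopia's actual implementation. It assumes a fixed-size splitter (kopia's default splitter is content-defined), an in-memory dictionary standing in for the repository, and it leaves out compression and encryption entirely. The names `split`, `snapshot` and `CHUNK_SIZE` are made up for this example.

```python
import hashlib

CHUNK_SIZE = 4  # tiny on purpose, so the example is easy to follow


def split(data: bytes) -> list[bytes]:
    """Toy fixed-size splitter (kopia's default splitter is content-defined)."""
    return [data[i:i + CHUNK_SIZE] for i in range(0, len(data), CHUNK_SIZE)]


def snapshot(data: bytes, repository: dict[str, bytes]) -> tuple[list[str], int]:
    """Back up `data`; return the ordered chunk IDs and the number of uploads."""
    chunk_ids, uploaded = [], 0
    for chunk in split(data):
        chunk_id = hashlib.blake2b(chunk, digest_size=16).hexdigest()
        if chunk_id not in repository:   # unknown hash: new data, upload it
            repository[chunk_id] = chunk
            uploaded += 1
        chunk_ids.append(chunk_id)
    return chunk_ids, uploaded


repo: dict[str, bytes] = {}
_, first = snapshot(b"aaaabbbbccccdddd", repo)
_, second = snapshot(b"aaaabbbbXXXXdddd", repo)  # only the third chunk changed
print(first, second)
```

The first snapshot uploads every chunk, while the second one only uploads the single chunk whose content changed.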

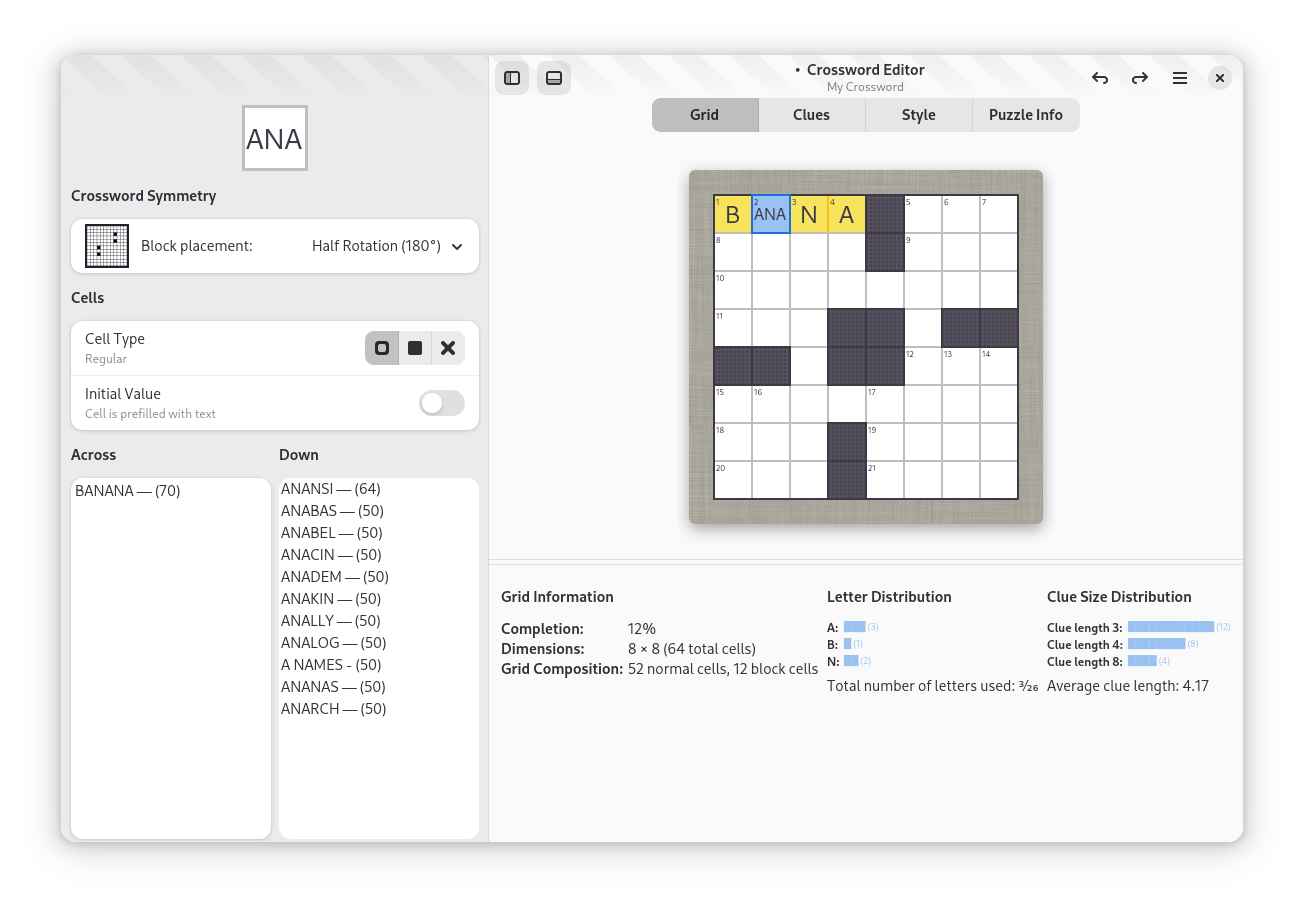

Kopia supports several splitters, several hash algorithms, several encryption algorithms, and several compression algorithms. Different processors have different optimizations and will perform more or less well, which is why kopia offers to pick between several splitters, hash, and compression algorithms.

The splitter, hash and encryption algorithms are defined per repository, when the repository is created. These algorithms cannot be changed after the repository has been created. After connecting a repository, the splitter and hash can be determined with

thib@tinykube:~ $ kopia repository status

Config file: /home/thib/.config/kopia/repository.config

Description: Repository in Filesystem: /tmp/kopia

Hostname: tinykube

Username: thib

Read-only: false

Format blob cache: 15m0s

Storage type: filesystem

Storage capacity: 1 TB

Storage available: 687.5 GB

Storage config: {

"path": "/tmp/kopia",

"fileMode": 384,

"dirMode": 448,

"dirShards": null

}

Unique ID: e1cf6b0c746b932a0d9b7398744968a14456073c857e7c2f2ca12b3ea036d33e

Hash: BLAKE2B-256-128

Encryption: AES256-GCM-HMAC-SHA256

Splitter: DYNAMIC-4M-BUZHASH

Format version: 2

Content compression: true

Password changes: true

Max pack length: 21 MB

Index Format: v2

Epoch Manager: enabled

Current Epoch: 465

Epoch refresh frequency: 20m0s

Epoch advance on: 20 blobs or 10.5 MB, minimum 24h0m0s

Epoch cleanup margin: 4h0m0s

Epoch checkpoint every: 7 epochs

The compression algorithm is defined by a kopia policy. By default kopia doesn't apply any compression.

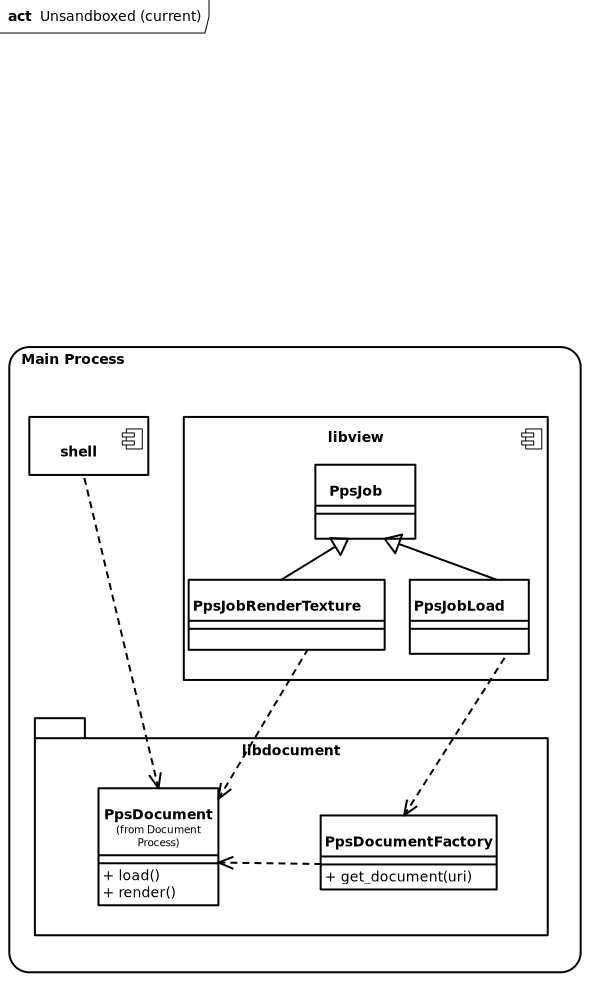

How kopia restores data

When kopia is instructed to restore data from a snapshot, it looks up the index table to figure out what chunks it must retrieve. It decrypts them, then decompresses them if they were compressed, and appends the relevant chunks together to reconstruct the files.

Kopia doesn't rely on the splitter and hash algorithms when performing a restore, but it relies on the encryption and compression ones.
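The restore path can be sketched in the same toy fashion. This is an illustration under loud assumptions: `zlib` stands in for whatever compression a policy would select, encryption is left out because Python's standard library ships neither AES nor ChaCha20, and the `store` and `restore` helpers are hypothetical names.

```python
import hashlib
import zlib


def store(chunk: bytes, repository: dict[str, bytes]) -> str:
    """Compress a chunk and file it under its hash (encryption is left out)."""
    chunk_id = hashlib.blake2b(chunk, digest_size=16).hexdigest()
    repository[chunk_id] = zlib.compress(chunk)
    return chunk_id


def restore(chunk_ids: list[str], repository: dict[str, bytes]) -> bytes:
    """Fetch each chunk listed in the index, decompress it, and append it."""
    return b"".join(zlib.decompress(repository[cid]) for cid in chunk_ids)


repo: dict[str, bytes] = {}
index = [store(part, repo) for part in (b"hello ", b"from ", b"kopia")]
restored = restore(index, repo)
print(restored)
```

Note that `restore` never splits or hashes anything: it only looks chunks up by ID and undoes the per-chunk transformations, which is why the cost of a restore is dominated by decryption and decompression.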

Figuring out the theoretical speed

Kopia has built-in benchmarks to let you figure out which hash and encryption algorithms are best for your machine. I'm trying to understand why the restore operation is slow, so I only need to know what I can expect from the encryption algorithms.

thib@tinykube:~ $ kopia benchmark encryption

Benchmarking encryption 'AES256-GCM-HMAC-SHA256'... (1000 x 1048576 bytes, parallelism 1)

Benchmarking encryption 'CHACHA20-POLY1305-HMAC-SHA256'... (1000 x 1048576 bytes, parallelism 1)

Encryption Throughput

-----------------------------------------------------------------

0. CHACHA20-POLY1305-HMAC-SHA256 173.3 MB / second

1. AES256-GCM-HMAC-SHA256 27.6 MB / second

-----------------------------------------------------------------

Fastest option for this machine is: --encryption=CHACHA20-POLY1305-HMAC-SHA256

The Raspberry Pi is notorious for not being excellent with encryption algorithms. The kopia repository was created from my VPS, a machine with much better results with AES. Running the same benchmark on my VPS gives much different results.

[thib@ergaster ~]$ kopia benchmark encryption

Benchmarking encryption 'AES256-GCM-HMAC-SHA256'... (1000 x 1048576 bytes, parallelism 1)

Benchmarking encryption 'CHACHA20-POLY1305-HMAC-SHA256'... (1000 x 1048576 bytes, parallelism 1)

Encryption Throughput

-----------------------------------------------------------------

0. AES256-GCM-HMAC-SHA256 2.1 GB / second

1. CHACHA20-POLY1305-HMAC-SHA256 699.1 MB / second

-----------------------------------------------------------------

Fastest option for this machine is: --encryption=AES256-GCM-HMAC-SHA256

Given that the repository I try to perform a restore from does not use compression and that it uses the AES256 encryption algorithm, I should expect a restore speed of 27.6 MB/s on the Raspberry Pi. So why is the restore so slow? Let's keep chasing the performance bottleneck.
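The two benchmark runs can be put side by side with a few divisions. The sketch below only reuses the throughput figures printed above, with the VPS result of 2.1 GB/s written as 2100 MB/s. Since the benchmark ran with parallelism 1, these are rough single-threaded figures rather than hard limits.

```python
# Single-threaded throughput reported by `kopia benchmark encryption`, in MB/s.
pi = {"AES256-GCM-HMAC-SHA256": 27.6, "CHACHA20-POLY1305-HMAC-SHA256": 173.3}
vps = {"AES256-GCM-HMAC-SHA256": 2100.0, "CHACHA20-POLY1305-HMAC-SHA256": 699.1}

aes = "AES256-GCM-HMAC-SHA256"
chacha = "CHACHA20-POLY1305-HMAC-SHA256"

vps_vs_pi = vps[aes] / pi[aes]          # how much faster the VPS is with AES
chacha_vs_aes = pi[chacha] / pi[aes]    # what the Pi would gain by switching
seconds_for_volume = 2800 / pi[aes]     # 2.8 GB volume at the Pi's AES rate

print(f"VPS is {vps_vs_pi:.0f}x faster than the Pi with AES")
print(f"ChaCha20 is {chacha_vs_aes:.1f}x faster than AES on the Pi")
print(f"2.8 GB at {pi[aes]} MB/s takes about {seconds_for_volume:.0f} s")
```

Even at the Pi's poor AES rate, the 2.8 GB volume should take minutes, not months, so decryption of the repository alone does not explain the initial result.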

The disk could be slow

The hardware

The Raspberry Pi is a brave little machine, but it was obviously not designed as a home lab server. The sd cards it usually boots from are notorious for being fragile and not supporting I/O intensive operations.

A common solution is to make the Raspberry Pi boot from an SSD drive. But to connect this kind of disk to the Raspberry Pi 4, you need a USB enclosure. I bought a Kingston SNV3S/1000G NVMe drive. It supposedly can read and write at 6 GB/s and 5 GB/s respectively. I put that drive in an ICY BOX IB-1817M-C31 enclosure, with a maximum theoretical speed of 1000 MB/s.

According to this thread on the Raspberry Pi forums, the USB controller of the Pi has a bandwidth of 4Gb/s ≈ 512 MB/s (and not 4 GB/s as I initially wrote. Thanks baobun on hackernews for pointing out my mistake!) shared across all 4 ports. Since I only plug my disk there, it should get all the bandwidth.

So the limiting factor is the USB controller of the Raspberry Pi, which should still give me about 512 MB/s, although baobun on hackernews also pointed out that the USB controller on the Pi might share a bus with the network card.

Let's see how close to the reality that is.

Disk sequential read speed

First, let's try with a gentle sequential read test to see how well it performs in ideal conditions.

thib@tinykube:~ $ fio --name TEST --eta-newline=5s --filename=temp.file --rw=read --size=2g --io_size=10g --blocksize=1024k --ioengine=libaio --fsync=10000 --iodepth=32 --direct=1 --numjobs=1 --runtime=60 --group_reporting

TEST: (g=0): rw=read, bs=(R) 1024KiB-1024KiB, (W) 1024KiB-1024KiB, (T) 1024KiB-1024KiB, ioengine=libaio, iodepth=32

fio-3.33

Starting 1 process

Jobs: 1 (f=1): [R(1)][11.5%][r=144MiB/s][r=144 IOPS][eta 00m:54s]

Jobs: 1 (f=1): [R(1)][19.7%][r=127MiB/s][r=126 IOPS][eta 00m:49s]

Jobs: 1 (f=1): [R(1)][27.9%][r=151MiB/s][r=151 IOPS][eta 00m:44s]

Jobs: 1 (f=1): [R(1)][36.1%][r=100MiB/s][r=100 IOPS][eta 00m:39s]

Jobs: 1 (f=1): [R(1)][44.3%][r=111MiB/s][r=111 IOPS][eta 00m:34s]

Jobs: 1 (f=1): [R(1)][53.3%][r=106MiB/s][r=105 IOPS][eta 00m:28s]

Jobs: 1 (f=1): [R(1)][61.7%][r=87.1MiB/s][r=87 IOPS][eta 00m:23s]

Jobs: 1 (f=1): [R(1)][70.0%][r=99.9MiB/s][r=99 IOPS][eta 00m:18s]

Jobs: 1 (f=1): [R(1)][78.3%][r=121MiB/s][r=121 IOPS][eta 00m:13s]

Jobs: 1 (f=1): [R(1)][86.7%][r=96.0MiB/s][r=96 IOPS][eta 00m:08s]

Jobs: 1 (f=1): [R(1)][95.0%][r=67.1MiB/s][r=67 IOPS][eta 00m:03s]

Jobs: 1 (f=1): [R(1)][65.6%][r=60.8MiB/s][r=60 IOPS][eta 00m:32s]

TEST: (groupid=0, jobs=1): err= 0: pid=3666160: Thu Jun 12 20:14:33 2025

read: IOPS=111, BW=112MiB/s (117MB/s)(6739MiB/60411msec)

slat (usec): min=133, max=41797, avg=3396.01, stdev=3580.27

clat (msec): min=12, max=1061, avg=281.85, stdev=140.49

lat (msec): min=14, max=1065, avg=285.25, stdev=140.68

clat percentiles (msec):

| 1.00th=[ 41], 5.00th=[ 86], 10.00th=[ 130], 20.00th=[ 171],

| 30.00th=[ 218], 40.00th=[ 245], 50.00th=[ 271], 60.00th=[ 296],

| 70.00th=[ 317], 80.00th=[ 355], 90.00th=[ 435], 95.00th=[ 550],

| 99.00th=[ 793], 99.50th=[ 835], 99.90th=[ 969], 99.95th=[ 1020],

| 99.99th=[ 1062]

bw ( KiB/s): min=44521, max=253445, per=99.92%, avg=114140.83, stdev=31674.32, samples=120

iops : min= 43, max= 247, avg=111.18, stdev=30.90, samples=120

lat (msec) : 20=0.07%, 50=1.69%, 100=4.94%, 250=35.79%, 500=50.73%

lat (msec) : 750=5.24%, 1000=1.45%, 2000=0.07%

cpu : usr=0.66%, sys=21.39%, ctx=7650, majf=0, minf=8218

IO depths : 1=0.1%, 2=0.1%, 4=0.2%, 8=0.5%, 16=0.9%, 32=98.2%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=99.9%, 8=0.0%, 16=0.0%, 32=0.1%, 64=0.0%, >=64=0.0%

issued rwts: total=6739,0,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=32

Run status group 0 (all jobs):

READ: bw=112MiB/s (117MB/s), 112MiB/s-112MiB/s (117MB/s-117MB/s), io=6739MiB (7066MB), run=60411-60411msec

Disk stats (read/write):

dm-0: ios=53805/810, merge=0/0, ticks=14465332/135892, in_queue=14601224, util=100.00%, aggrios=13485/943, aggrmerge=40434/93, aggrticks=84110/2140, aggrin_queue=86349, aggrutil=36.32%

sda: ios=13485/943, merge=40434/93, ticks=84110/2140, in_queue=86349, util=36.32%

So I can read from my disk at 117 MB/s. We're far from the theoretical 1000 MB/s. One thing is interesting here: the read performance seems to decrease over time. Running the same test again with htop to monitor what happens, I can see something even more surprising. Not only does the speed remain low, but all four CPU cores are pegged.
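fio prints bandwidth twice, once in binary units (MiB/s) and once in decimal units (MB/s). A quick sketch shows how both numbers fall out of the summary line `(6739MiB/60411msec)`, and how far the result is from the enclosure's advertised speed.

```python
MIB = 1024 ** 2   # fio's MiB/s figure uses binary units
MB = 1000 ** 2    # the figure in parentheses uses decimal units

transferred_mib = 6739     # "(6739MiB/60411msec)" in the fio summary
runtime_ms = 60411

bytes_per_s = transferred_mib * MIB / (runtime_ms / 1000)
print(f"{bytes_per_s / MIB:.0f} MiB/s, {bytes_per_s / MB:.0f} MB/s")

# Share of the enclosure's advertised 1000 MB/s actually reached.
print(f"{bytes_per_s / MB / 1000:.0%} of 1000 MB/s")
```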

So when performing a disk read test, the CPU is going to maximum capacity, with a wait metric of about 0%. So the CPU is not waiting for the disk. Why would my CPU go crazy when just reading from disk? Oh. Oh no. The Raspberry Pi performs poorly with encryption. I am trying to read from an encrypted drive. This is why even with this simple reading test my CPU is a bottleneck.

Disk random read/write speed

Let's run the test that this wiki describes as "will show the absolute worst I/O performance you can expect."

thib@tinykube:~ $ fio --name TEST --eta-newline=5s --filename=temp.file --rw=randrw --size=2g --io_size=10g --blocksize=4k --ioengine=libaio --fsync=1 --iodepth=1 --direct=1 --numjobs=32 --runtime=60 --group_reporting

[...]

Run status group 0 (all jobs):

READ: bw=6167KiB/s (6315kB/s), 6167KiB/s-6167KiB/s (6315kB/s-6315kB/s), io=361MiB (379MB), run=60010-60010msec

WRITE: bw=6167KiB/s (6315kB/s), 6167KiB/s-6167KiB/s (6315kB/s-6315kB/s), io=361MiB (379MB), run=60010-60010msec

Disk stats (read/write):

dm-0: ios=92343/185391, merge=0/0, ticks=90656/620960, in_queue=711616, util=95.25%, aggrios=92527/182570, aggrmerge=0/3625, aggrticks=65580/207873, aggrin_queue=319891, aggrutil=55.65%

sda: ios=92527/182570, merge=0/3625, ticks=65580/207873, in_queue=319891, util=55.65%

In the worst conditions, I can expect a read and write speed of 6 MB/s each.

The situation must be even worse when trying to restore my backups with kopia: I read an encrypted repository from an encrypted disk and try to write data on the same encrypted disk. Let's open htop and perform a kopia restore to confirm that the CPU is blocking, and that I'm not waiting for my disk.

htop seems to confirm that intuition: it looks like the bottleneck when trying to restore a kopia backup on my Raspberry Pi is its CPU.

Let's test with an unencrypted disk to see if that hypothesis holds. I should expect higher restore speeds because the CPU will not be busy decrypting/encrypting data to disk, but it will still be busy decrypting data from the kopia repository.

Testing it all

I've flashed a clean Raspberry Pi OS Lite image onto an sd card, and booted from it. Using fdisk and mkfs.ext4 I can format the encrypted drive the Raspberry Pi was previously booting from into a clean, unencrypted drive.

I then create a mount point for the disk, mount it, and change the ownership to my user thib.

thib@tinykube:~ $ sudo mkdir /mnt/icy

thib@tinykube:~ $ sudo mount /dev/sda1 /mnt/icy

thib@tinykube:~ $ sudo chown -R thib:thib /mnt/icy

I can now perform my tests, not forgetting to change the --filename parameter to /mnt/icy/temp.file so the benchmark is performed on the disk and not on the sd card.

Unencrypted disk performance

Sequential read speed

I can then run the sequential read test from the mounted disk

thib@tinykube:~ $ fio --name TEST --eta-newline=5s --filename=/mnt/icy/temp.file --rw=read --size=2g --io_size=10g --blocksize=1024k --ioengine=libaio --fsync=10000 --iodepth=32 --direct=1 --numjobs=1 --runtime=60 --group_reporting

TEST: (g=0): rw=read, bs=(R) 1024KiB-1024KiB, (W) 1024KiB-1024KiB, (T) 1024KiB-1024KiB, ioengine=libaio, iodepth=32

fio-3.33

Starting 1 process

TEST: Laying out IO file (1 file / 2048MiB)

Jobs: 1 (f=1): [R(1)][19.4%][r=333MiB/s][r=333 IOPS][eta 00m:29s]

Jobs: 1 (f=1): [R(1)][36.4%][r=333MiB/s][r=332 IOPS][eta 00m:21s]

Jobs: 1 (f=1): [R(1)][53.1%][r=333MiB/s][r=332 IOPS][eta 00m:15s]

Jobs: 1 (f=1): [R(1)][68.8%][r=333MiB/s][r=332 IOPS][eta 00m:10s]

Jobs: 1 (f=1): [R(1)][87.1%][r=332MiB/s][r=332 IOPS][eta 00m:04s]

Jobs: 1 (f=1): [R(1)][100.0%][r=334MiB/s][r=333 IOPS][eta 00m:00s]

TEST: (groupid=0, jobs=1): err= 0: pid=14807: Sun Jun 15 11:58:14 2025

read: IOPS=333, BW=333MiB/s (349MB/s)(10.0GiB/30733msec)

slat (usec): min=83, max=56105, avg=2967.97, stdev=10294.97

clat (msec): min=28, max=144, avg=92.78, stdev=16.27

lat (msec): min=30, max=180, avg=95.75, stdev=18.44

clat percentiles (msec):

| 1.00th=[ 71], 5.00th=[ 78], 10.00th=[ 80], 20.00th=[ 83],

| 30.00th=[ 86], 40.00th=[ 88], 50.00th=[ 88], 60.00th=[ 90],

| 70.00th=[ 93], 80.00th=[ 97], 90.00th=[ 126], 95.00th=[ 131],

| 99.00th=[ 140], 99.50th=[ 142], 99.90th=[ 144], 99.95th=[ 144],

| 99.99th=[ 144]

bw ( KiB/s): min=321536, max=363816, per=99.96%, avg=341063.31, stdev=14666.91, samples=61

iops : min= 314, max= 355, avg=333.02, stdev=14.31, samples=61

lat (msec) : 50=0.61%, 100=83.42%, 250=15.98%

cpu : usr=0.31%, sys=18.80%, ctx=1173, majf=0, minf=8218

IO depths : 1=0.1%, 2=0.1%, 4=0.2%, 8=0.4%, 16=0.8%, 32=98.5%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.1%, 64=0.0%, >=64=0.0%

issued rwts: total=10240,0,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=32

Run status group 0 (all jobs):

READ: bw=333MiB/s (349MB/s), 333MiB/s-333MiB/s (349MB/s-349MB/s), io=10.0GiB (10.7GB), run=30733-30733msec

Disk stats (read/write):

sda: ios=20359/2, merge=0/1, ticks=1622783/170, in_queue=1622998, util=82.13%

I can read from that disk at a speed of about 350 MB/s. Looking at htop while the read test is running paints a very different picture compared to when the drive was encrypted.

I can see that the CPU is not very busy, and the wait time is well above 10%. Unsurprisingly this time, when testing the maximum read capacity of the disk, the bottleneck is the disk.

Sequential write speed

thib@tinykube:~ $ fio --name TEST --eta-newline=5s --filename=/mnt/icy/temp.file --rw=write --size=2g --io_size=10g --blocksize=1024k --ioengine=libaio --fsync=10000 --iodepth=32 --direct=1 --numjobs=1 --runtime=60 --group_reporting

TEST: (g=0): rw=write, bs=(R) 1024KiB-1024KiB, (W) 1024KiB-1024KiB, (T) 1024KiB-1024KiB, ioengine=libaio, iodepth=32

fio-3.33

Starting 1 process

TEST: Laying out IO file (1 file / 2048MiB)

Jobs: 1 (f=1): [W(1)][12.5%][w=319MiB/s][w=318 IOPS][eta 00m:49s]

Jobs: 1 (f=1): [W(1)][28.6%][w=319MiB/s][w=318 IOPS][eta 00m:30s]

Jobs: 1 (f=1): [W(1)][44.7%][w=319MiB/s][w=318 IOPS][eta 00m:21s]

Jobs: 1 (f=1): [W(1)][59.5%][w=319MiB/s][w=318 IOPS][eta 00m:15s]

Jobs: 1 (f=1): [W(1)][75.0%][w=318MiB/s][w=318 IOPS][eta 00m:09s]

Jobs: 1 (f=1): [W(1)][91.4%][w=320MiB/s][w=319 IOPS][eta 00m:03s]

Jobs: 1 (f=1): [W(1)][100.0%][w=312MiB/s][w=311 IOPS][eta 00m:00s]

TEST: (groupid=0, jobs=1): err= 0: pid=15551: Sun Jun 15 12:19:37 2025

write: IOPS=300, BW=300MiB/s (315MB/s)(10.0GiB/34116msec); 0 zone resets

slat (usec): min=156, max=1970.0k, avg=3244.94, stdev=19525.85

clat (msec): min=18, max=2063, avg=102.64, stdev=103.41

lat (msec): min=19, max=2066, avg=105.89, stdev=105.10

clat percentiles (msec):

| 1.00th=[ 36], 5.00th=[ 96], 10.00th=[ 97], 20.00th=[ 97],

| 30.00th=[ 97], 40.00th=[ 97], 50.00th=[ 97], 60.00th=[ 97],

| 70.00th=[ 97], 80.00th=[ 97], 90.00th=[ 101], 95.00th=[ 101],

| 99.00th=[ 169], 99.50th=[ 182], 99.90th=[ 2039], 99.95th=[ 2056],

| 99.99th=[ 2056]

bw ( KiB/s): min= 6144, max=329728, per=100.00%, avg=321631.80, stdev=39791.66, samples=65

iops : min= 6, max= 322, avg=314.08, stdev=38.86, samples=65

lat (msec) : 20=0.05%, 50=1.89%, 100=88.33%, 250=9.44%, 2000=0.04%

lat (msec) : >=2000=0.24%

fsync/fdatasync/sync_file_range:

sync (nsec): min=189719k, max=189719k, avg=189718833.00, stdev= 0.00

sync percentiles (msec):

| 1.00th=[ 190], 5.00th=[ 190], 10.00th=[ 190], 20.00th=[ 190],

| 30.00th=[ 190], 40.00th=[ 190], 50.00th=[ 190], 60.00th=[ 190],

| 70.00th=[ 190], 80.00th=[ 190], 90.00th=[ 190], 95.00th=[ 190],

| 99.00th=[ 190], 99.50th=[ 190], 99.90th=[ 190], 99.95th=[ 190],

| 99.99th=[ 190]

cpu : usr=7.25%, sys=11.37%, ctx=22027, majf=0, minf=26

IO depths : 1=0.1%, 2=0.1%, 4=0.2%, 8=0.4%, 16=0.8%, 32=98.5%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.1%, 64=0.0%, >=64=0.0%

issued rwts: total=0,10240,0,1 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=32

Run status group 0 (all jobs):

WRITE: bw=300MiB/s (315MB/s), 300MiB/s-300MiB/s (315MB/s-315MB/s), io=10.0GiB (10.7GB), run=34116-34116msec

Disk stats (read/write):

sda: ios=0/20481, merge=0/47, ticks=0/1934829, in_queue=1935035, util=88.80%

I now know I can write at about 300 MB/s on that unencrypted disk. Looking at htop while the test was running, I also know that the disk is the bottleneck and not the CPU.

Random read/write speed

Let's run the "worst performance test" again from the unencrypted disk.

thib@tinykube:~ $ fio --name TEST --eta-newline=5s --filename=/mnt/icy/temp.file --rw=randrw --size=2g --io_size=10g --blocksize=4k --ioengine=libaio --fsync=1 --iodepth=1 --direct=1 --numjobs=32 --runtime=60 --group_reporting

TEST: (g=0): rw=randrw, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=1

...

fio-3.33

Starting 32 processes

TEST: Laying out IO file (1 file / 2048MiB)

Jobs: 32 (f=32): [m(32)][13.1%][r=10.5MiB/s,w=10.8MiB/s][r=2677,w=2773 IOPS][eta 00m:53s]

Jobs: 32 (f=32): [m(32)][23.0%][r=11.0MiB/s,w=11.0MiB/s][r=2826,w=2819 IOPS][eta 00m:47s]

Jobs: 32 (f=32): [m(32)][32.8%][r=10.9MiB/s,w=11.5MiB/s][r=2780,w=2937 IOPS][eta 00m:41s]

Jobs: 32 (f=32): [m(32)][42.6%][r=10.8MiB/s,w=11.0MiB/s][r=2775,w=2826 IOPS][eta 00m:35s]

Jobs: 32 (f=32): [m(32)][52.5%][r=10.9MiB/s,w=11.3MiB/s][r=2787,w=2886 IOPS][eta 00m:29s]

Jobs: 32 (f=32): [m(32)][62.3%][r=11.3MiB/s,w=11.6MiB/s][r=2901,w=2967 IOPS][eta 00m:23s]

Jobs: 32 (f=32): [m(32)][72.1%][r=11.4MiB/s,w=11.5MiB/s][r=2908,w=2942 IOPS][eta 00m:17s]

Jobs: 32 (f=32): [m(32)][82.0%][r=11.6MiB/s,w=11.7MiB/s][r=2960,w=3004 IOPS][eta 00m:11s]

Jobs: 32 (f=32): [m(32)][91.8%][r=11.0MiB/s,w=11.2MiB/s][r=2815,w=2861 IOPS][eta 00m:05s]

Jobs: 32 (f=32): [m(32)][100.0%][r=11.0MiB/s,w=10.5MiB/s][r=2809,w=2700 IOPS][eta 00m:00s]

TEST: (groupid=0, jobs=32): err= 0: pid=14830: Sun Jun 15 12:05:54 2025

read: IOPS=2797, BW=10.9MiB/s (11.5MB/s)(656MiB/60004msec)

slat (usec): min=14, max=1824, avg=88.06, stdev=104.92

clat (usec): min=2, max=7373, avg=939.12, stdev=375.40

lat (usec): min=130, max=7479, avg=1027.18, stdev=360.39

clat percentiles (usec):

| 1.00th=[ 6], 5.00th=[ 180], 10.00th=[ 285], 20.00th=[ 644],

| 30.00th=[ 889], 40.00th=[ 971], 50.00th=[ 1037], 60.00th=[ 1090],

| 70.00th=[ 1156], 80.00th=[ 1221], 90.00th=[ 1319], 95.00th=[ 1385],

| 99.00th=[ 1532], 99.50th=[ 1614], 99.90th=[ 1811], 99.95th=[ 1926],

| 99.99th=[ 6587]

bw ( KiB/s): min= 8062, max=14560, per=100.00%, avg=11198.39, stdev=39.34, samples=3808

iops : min= 2009, max= 3640, avg=2793.55, stdev= 9.87, samples=3808

write: IOPS=2806, BW=11.0MiB/s (11.5MB/s)(658MiB/60004msec); 0 zone resets

slat (usec): min=15, max=2183, avg=92.95, stdev=108.34

clat (usec): min=2, max=7118, avg=850.19, stdev=310.22

lat (usec): min=110, max=8127, avg=943.13, stdev=312.58

clat percentiles (usec):

| 1.00th=[ 6], 5.00th=[ 174], 10.00th=[ 302], 20.00th=[ 668],

| 30.00th=[ 832], 40.00th=[ 889], 50.00th=[ 938], 60.00th=[ 988],

| 70.00th=[ 1020], 80.00th=[ 1057], 90.00th=[ 1123], 95.00th=[ 1172],

| 99.00th=[ 1401], 99.50th=[ 1532], 99.90th=[ 1745], 99.95th=[ 1844],

| 99.99th=[ 2147]

bw ( KiB/s): min= 8052, max=14548, per=100.00%, avg=11234.02, stdev=40.18, samples=3808

iops : min= 2004, max= 3634, avg=2802.45, stdev=10.08, samples=3808

lat (usec) : 4=0.26%, 10=1.50%, 20=0.08%, 50=0.14%, 100=0.42%

lat (usec) : 250=5.89%, 500=7.98%, 750=6.66%, 1000=31.11%

lat (msec) : 2=45.93%, 4=0.01%, 10=0.01%

fsync/fdatasync/sync_file_range:

sync (usec): min=1323, max=17158, avg=5610.76, stdev=1148.23

sync percentiles (usec):

| 1.00th=[ 3195], 5.00th=[ 4228], 10.00th=[ 4490], 20.00th=[ 4686],

| 30.00th=[ 4883], 40.00th=[ 5080], 50.00th=[ 5342], 60.00th=[ 5604],

| 70.00th=[ 6128], 80.00th=[ 6718], 90.00th=[ 7177], 95.00th=[ 7570],

| 99.00th=[ 8717], 99.50th=[ 9241], 99.90th=[ 9896], 99.95th=[10552],

| 99.99th=[15401]

cpu : usr=0.51%, sys=2.25%, ctx=1006148, majf=0, minf=977

IO depths : 1=200.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=167837,168384,0,336200 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=1

Run status group 0 (all jobs):

READ: bw=10.9MiB/s (11.5MB/s), 10.9MiB/s-10.9MiB/s (11.5MB/s-11.5MB/s), io=656MiB (687MB), run=60004-60004msec

WRITE: bw=11.0MiB/s (11.5MB/s), 11.0MiB/s-11.0MiB/s (11.5MB/s-11.5MB/s), io=658MiB (690MB), run=60004-60004msec

Disk stats (read/write):

sda: ios=167422/311772, merge=0/14760, ticks=153900/409024, in_queue=615762, util=81.63%

The read and write performance is much worse than I expected, only a few MB/s above the same test on the encrypted drive. But here again, htop tells us that the disk is the bottleneck, and not the CPU.
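Putting the encrypted and unencrypted fio results next to each other makes the difference easier to read. The sketch below only reuses the MB/s figures from the fio summaries in this article; the dictionary layout is an arbitrary choice for this example.

```python
# Throughput in MB/s, taken from the fio summaries for each run.
encrypted = {"sequential read": 117.0, "random read": 6.315}
unencrypted = {"sequential read": 349.0, "random read": 11.5}

speedup = {test: unencrypted[test] / encrypted[test] for test in encrypted}
for test, factor in speedup.items():
    print(f"{test}: {factor:.1f}x faster without disk encryption")

# 4 KiB blocks: bandwidth and IOPS are two views of the same number.
iops = 10.9 * 1024 / 4   # 10.9 MiB/s expressed in 4 KiB operations per second
print(f"about {iops:.0f} read IOPS")
```

Sequential reads gain roughly a factor of three once disk encryption is out of the way, while the random 4k test gains less than a factor of two, which fits with the disk rather than the CPU being the limit in that test.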

Copying the bucket

I now know that my disk can read or write at a maximum speed of about 300 MB/s. Let's sync the repository again from Scaleway s3.

thib@tinykube:~ $ aws s3 sync s3://ergaster-backup/ /mnt/icy/s3 \

--endpoint-url https://s3.fr-par.scw.cloud

The aws CLI reports download speeds between 45 and 65 MB/s, much higher than the initial tests! Having a look at htop while the sync happens, I can see that the CPUs are not at full capacity, and that the i/o wait time is at 0%.

The metric that has gone up though is si, which stands for softirqs. This paper and this StackOverflow answer explain what softirqs are. I understand the si metric from (h)top as "time the CPU spends to make the system's devices work." In this case, I believe this is time the CPU spends helping the network chip. If I'm wrong and you have a better explanation, please reach out at comments@ergaster.org!
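On Linux, the per-state CPU counters behind figures like si and wa are exposed on the `cpu` line of /proc/stat, in the order user, nice, system, idle, iowait, irq, softirq, steal. A minimal sketch of how a percentage can be derived from two readings of that line follows. The sample lines are hypothetical values for illustration, not measurements from the Pi, and `cpu_shares` is a made-up helper name.

```python
def cpu_shares(before: str, after: str) -> dict[str, float]:
    """Percentage of CPU time per state between two 'cpu' lines of /proc/stat."""
    names = ["user", "nice", "system", "idle", "iowait", "irq", "softirq", "steal"]
    first = [int(v) for v in before.split()[1:9]]
    second = [int(v) for v in after.split()[1:9]]
    deltas = [b - a for a, b in zip(first, second)]
    total = sum(deltas)
    return {name: 100 * delta / total for name, delta in zip(names, deltas)}


# Hypothetical samples, taken a moment apart, for illustration only.
sample_1 = "cpu 1000 0 500 8000 10 0 200 0 0 0"
sample_2 = "cpu 1020 0 560 8240 10 0 280 0 0 0"

shares = cpu_shares(sample_1, sample_2)
print(f"si: {shares['softirq']:.1f}%  wa: {shares['iowait']:.1f}%")
```

On a real machine, the two strings would come from reading the first line of /proc/stat twice with a short pause in between.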

Testing kopia's performance

Now for the final tests, let's first try to perform a restore from the AES-encrypted repository directly from the s3 bucket. Then, let's change the encryption algorithm of the repository and perform a restore.

Restoring from the s3 bucket

After connecting kopia to the repository in my s3 bucket, I perform a tentative restore and...

thib@tinykube:/mnt/icy $ kopia restore k5a270ab7f4acf72d4c3830a58edd7106

Restoring to local filesystem (/mnt/icy/k5a270ab7f4acf72d4c3830a58edd7106) with parallelism=8...

Processed 94929 (102.9 GB) of 118004 (233.3 GB) 19.8 MB/s (44.1%) remaining 1h49m45s.

I'm reaching much higher speeds, closer to the theoretical 27.6 MB/s I got in my encryption benchmark! Looking at htop, I can see that the CPU remains the bottleneck when restoring. Those are decent speeds for a small and power efficient device like the Raspberry Pi, but this is not enough for me to use it in production.
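The remaining time in the progress line can be cross-checked against the displayed rate, and the rate can be compared with the benchmark figure. This is a small sketch assuming decimal units (1 GB = 1000 MB); `format_eta` is a hypothetical helper that prints durations in the same XhYmZs shape as the progress line.

```python
def format_eta(seconds: float) -> str:
    """Render a duration in the XhYmZs shape used by the progress line."""
    s = int(seconds)
    return f"{s // 3600}h{s % 3600 // 60}m{s % 60}s"


total_gb, done_gb = 233.3, 102.9   # from the progress line
rate_mb_s = 19.8

remaining_s = (total_gb - done_gb) * 1000 / rate_mb_s
print(format_eta(remaining_s))

# How close the restore gets to the single-threaded AES benchmark (27.6 MB/s).
print(f"{rate_mb_s / 27.6:.0%} of the benchmark figure")
```

The computed duration agrees with the remaining time kopia displays, and the restore runs at roughly three quarters of the benchmark rate.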

The CPU is the limiting factor, and the Pi is busy exclusively doing a restore. If it were also running my services, the performance of both the restore and the services would degrade. We should be able to achieve better results by changing the encryption algorithm of the repository.

Re-encrypting the repository

Since the encryption algorithm can only be set when the repository is created, I need to create a new repository with the Chacha algorithm and ask kopia to decrypt the current repository encrypted with AES and re-encrypt it using Chacha.

The Pi performs so poorly with AES that it would take days to do so. I can do this operation on my beefy VPS and then transfer the repository data onto my Pi.

So on my VPS, I then connect to the s3 repo, passing it an option to dump the config in a special place

[thib@ergaster ~]$

Enter password to open repository:

Connected to repository.

NOTICE: Kopia will check for updates on GitHub every 7 days, starting 24 hours after first use.

To disable this behavior, set environment variable KOPIA_CHECK_FOR_UPDATES=false

Alternatively you can remove the file "/home/thib/old.config.update-info.json".

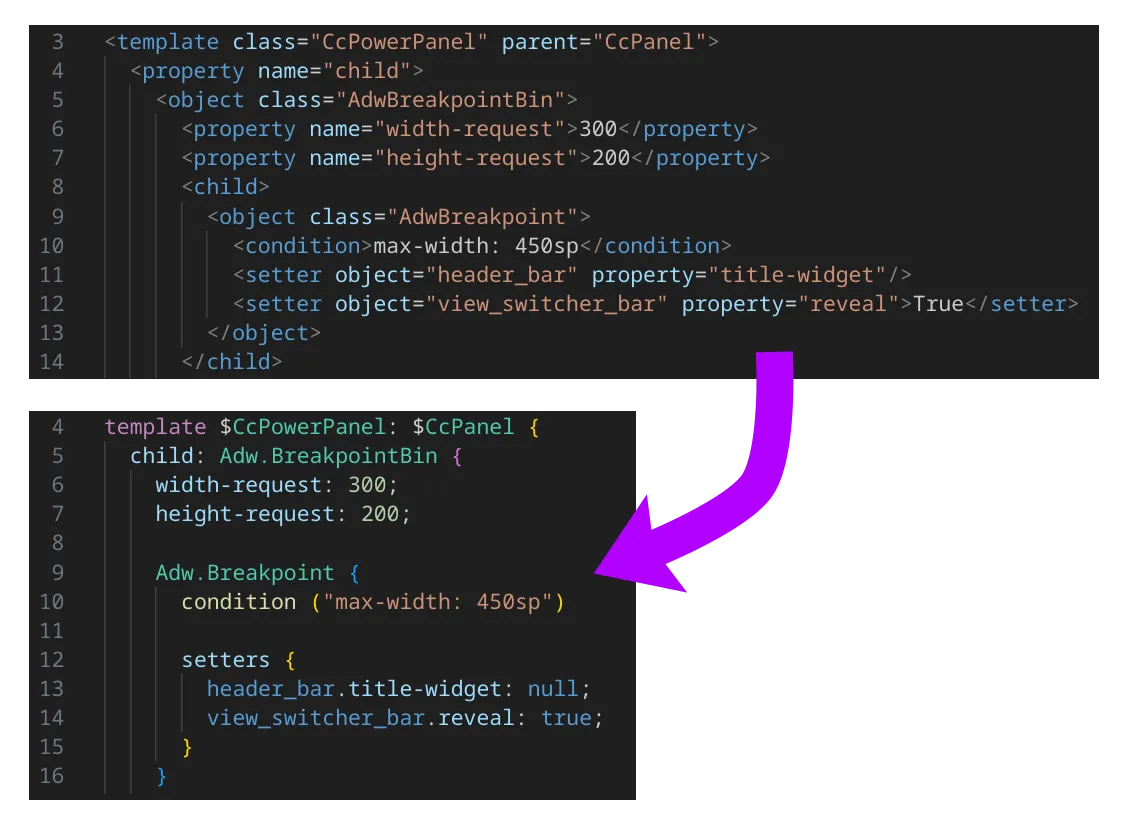

I then create a filesystem repo on my VPS, with the new encryption algorithm that is faster on the Pi

[thib@ergaster ~]$ kopia repo create filesystem \

--block-hash=BLAKE2B-256-128 \

--encryption=CHACHA20-POLY1305-HMAC-SHA256 \

--path=/home/thib/kopia_chacha

Enter password to create new repository:

Re-enter password for verification:

Initializing repository with:

block hash: BLAKE2B-256-128

encryption: CHACHA20-POLY1305-HMAC-SHA256

key derivation: scrypt-65536-8-1

splitter: DYNAMIC-4M-BUZHASH

Connected to repository.

And I can finally launch the migration to retrieve data from the s3 provider and migrate it locally.

[thib@ergaster ~]$ kopia snapshot migrate \

--all \

--source-config=/home/thib/old.config \

--parallel 16

I check that the repository is using the right encryption with

[thib@ergaster ~]$ kopia repo status

Config file: /home/thib/.config/kopia/repository.config

Description: Repository in Filesystem: /home/thib/kopia_chacha

Hostname: ergaster

Username: thib

Read-only: false

Format blob cache: 15m0s

Storage type: filesystem

Storage capacity: 1.3 TB

Storage available: 646.3 GB

Storage config: {

"path": "/home/thib/kopia_chacha",

"fileMode": 384,

"dirMode": 448,

"dirShards": null

}

Unique ID: eaa6041f654c5e926aa65442b5e80f6e8cf35c1db93573b596babf7cff8641d5

Hash: BLAKE2B-256-128

Encryption: AES256-GCM-HMAC-SHA256

Splitter: DYNAMIC-4M-BUZHASH

Format version: 3

Content compression: true

Password changes: true

Max pack length: 21 MB

Index Format: v2

Epoch Manager: enabled

Current Epoch: 0

Epoch refresh frequency: 20m0s

Epoch advance on: 20 blobs or 10.5 MB, minimum 24h0m0s

Epoch cleanup margin: 4h0m0s

Epoch checkpoint every: 7 epochs

I could scp that repository to my Raspberry Pi, but I want to evaluate the restore performance under the same conditions as before, so I create a new s3 bucket and sync the Chacha-encrypted repository to it. My repository weighs about 200 GB. Pushing it to a new bucket and pulling it from the Pi will only cost me a handful of euros.

[thib@ergaster ~]$ kopia repository sync-to s3 \

--bucket=chacha \

--access-key=REDACTED \

--secret-access-key=REDACTED \

--endpoint="s3.fr-par.scw.cloud" \

--parallel 16

After it's done, I switch to the Raspberry Pi, disconnect kopia from the former AES-encrypted repo and connect it to the new Chacha-encrypted repo in that new bucket

thib@tinykube:~ $ kopia repo disconnect

thib@tinykube:~ $ kopia repository connect s3 \

--bucket=chacha \

--access-key=REDACTED \

--secret-access-key=REDACTED \

--endpoint="s3.fr-par.scw.cloud"

Enter password to open repository:

Connected to repository.

I can then check that the repo indeed uses the Chacha encryption algorithm

thib@tinykube:~ $ kopia repo status

Config file: /home/thib/.config/kopia/repository.config

Description: Repository in S3: s3.fr-par.scw.cloud chacha

Hostname: tinykube

Username: thib

Read-only: false

Format blob cache: 15m0s

Storage type: s3

Storage capacity: unbounded

Storage config: {

"bucket": "chacha",

"endpoint": "s3.fr-par.scw.cloud",

"accessKeyID": "SCWW3H0VJTP98ZJXJJ8V",

"secretAccessKey": "************************************",

"sessionToken": "",

"roleARN": "",

"sessionName": "",

"duration": "0s",

"roleEndpoint": "",

"roleRegion": ""

}

Unique ID: 632d3c3999fa2ca3b1e7e79b9ebb5b498ef25438b732762589537020977dc35c

Hash: BLAKE2B-256-128

Encryption: CHACHA20-POLY1305-HMAC-SHA256

Splitter: DYNAMIC-4M-BUZHASH

Format version: 3

Content compression: true

Password changes: true

Max pack length: 21 MB

Index Format: v2

Epoch Manager: enabled

Current Epoch: 0

Epoch refresh frequency: 20m0s

Epoch advance on: 20 blobs or 10.5 MB, minimum 24h0m0s

Epoch cleanup margin: 4h0m0s

Epoch checkpoint every: 7 epochs

I can now do a test restore

thib@tinykube:~ $ kopia restore k6303a292f182dcabab119b4d0e13b7d1 /mnt/icy/nextcloud-chacha

Restoring to local filesystem (/mnt/icy/nextcloud-chacha) with parallelism=8...

Processed 82254 (67 GB) of 118011 (233.4 GB) 23.1 MB/s (28.7%) remaining 1h59m50s

After a minute or two of restoring at 1.5 MB/s with CPUs mostly idle, the Pi starts restoring faster and faster. The restore speed displayed by kopia very slowly rises to 23.1 MB/s. I expected it to reach at least 70 or 80 MB/s.
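As a sanity check on the numbers in that progress line, here is a minimal Python sketch that recomputes the remaining time from the amount restored and the displayed rate. The function names are mine, and I assume decimal units (1 GB = 1000 MB); kopia's own rounding and unit conventions may differ slightly.

```python
def remaining_seconds(total_gb, done_gb, rate_mb_per_s):
    """Seconds left at a constant rate, assuming 1 GB = 1000 MB."""
    remaining_mb = (total_gb - done_gb) * 1000
    return remaining_mb / rate_mb_per_s


def format_duration(seconds):
    """Format a number of seconds as XhYYmZZs."""
    seconds = int(round(seconds))
    hours, rest = divmod(seconds, 3600)
    minutes, secs = divmod(rest, 60)
    return f"{hours}h{minutes:02d}m{secs:02d}s"


# Values copied from the progress line: 67 GB of 233.4 GB at 23.1 MB/s
print(format_duration(remaining_seconds(233.4, 67, 23.1)))   # 2h00m03s
# A full restore at that same constant rate
print(format_duration(remaining_seconds(233.4, 0, 23.1)))    # 2h48m24s
```

The first result lands within seconds of the 1h59m50s that kopia reports. Since the displayed rate was still climbing, the real total is not a simple constant-rate figure, but close to three hours for 233.4 GB is the right order of magnitude.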

The CPU doesn't look like it's running at full capacity. While the I/O wait time stayed below 10% most of the time, I could see spikes where the wa metric went above 80% for some of the CPUs, and sometimes for all of them at the same time.
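The wa figure is the share of time a CPU spends idle while waiting for I/O. On Linux the kernel exposes the underlying counters in /proc/stat. The Python sketch below is not what I ran during the restore, just an illustration of how that percentage can be derived from two samples of that file. The function names and the sample values are made up for the example.

```python
def parse_cpu_times(stat_text):
    """Return {cpu_name: (iowait_ticks, total_ticks)} from /proc/stat content."""
    times = {}
    for line in stat_text.splitlines():
        fields = line.split()
        if not fields or not fields[0].startswith("cpu"):
            continue
        # Columns: user nice system idle iowait irq softirq steal guest guest_nice
        values = [int(v) for v in fields[1:]]
        iowait = values[4]
        # Guest time is already included in user and nice, so stop at steal
        total = sum(values[:8])
        times[fields[0]] = (iowait, total)
    return times


def iowait_percent(before, after):
    """Percentage of elapsed ticks spent in iowait between two samples."""
    result = {}
    for cpu, (wait_after, total_after) in after.items():
        wait_before, total_before = before[cpu]
        elapsed = total_after - total_before
        result[cpu] = 100.0 * (wait_after - wait_before) / elapsed if elapsed else 0.0
    return result


# Made-up samples for a single core, taken a moment apart
sample_1 = "cpu0 100 0 50 800 50 0 0 0 0 0"
sample_2 = "cpu0 110 0 55 810 125 0 0 0 0 0"
print(iowait_percent(parse_cpu_times(sample_1), parse_cpu_times(sample_2)))
# {'cpu0': 75.0}
```

On a real machine, the two samples would come from reading /proc/stat twice, a second or so apart. A core that keeps showing a high value here is mostly waiting for the disk, not computing.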

With the Chacha encryption algorithm, it looks like the bottleneck is no longer the CPU but the disk. Unfortunately, I can only attach an NVMe drive via a USB enclosure on my Raspberry Pi 4, so I won't be able to remove that bottleneck.

Conclusion

It was a fun journey figuring out why my Raspberry Pi 4 was too slow to restore data backed up from my VPS. I now know the value of htop when chasing bottlenecks. I also understand better how Kopia works, and the importance of using encryption and hash algorithms that work well on the machine that will perform the backups and restores.

When doing a restore, the Raspberry Pi had to pull the repository data from Scaleway, decrypt the chunks from the repository, and encrypt the data again to write it to disk. The CPU of the Raspberry Pi is not optimized for encryption and favors power efficiency over computing power. It was completely saturated by all that decryption and encryption.

My only regret here is that I couldn't test a Chacha-encrypted kopia repository on an encrypted disk, since my Raspberry Pi refused to boot from the encrypted drive shortly after I tested the random read / write speed. I went from a restore speed in bytes per second to a restore speed in dozens of megabytes per second. But even without the disk encryption overhead, the Pi is too slow at restoring backups for me to use it in production.

Since I intend to run quite a few services on my server (k3s, Flux, Prometheus, kube-state-metrics, Grafana, velero, and a flurry of actual user-facing services) I need a much beefier machine. I purchased a Minisforum UM880 Plus to host it all, and now I know the importance of configuring velero and how it uses kopia for maximum efficiency on my machine.

A massive thank you to my friends and colleagues, Olivier Reivilibre, Ben Banfield-Zanin, and Guillaume Villemont for their suggestions when chasing the bottleneck.
